Bachelor project, 7th Semester

Winter ’19/’20

Neutral technology?

You won’t be surprised that we look at technology from every angle in the Interactive Media program. That is how this project came about. The team sees a need for action in the development of artificial intelligence systems, particularly when it comes to promoting diversity and participation. The focus is on the decision systems that surround us and affect participation: how these systems discriminate, and how that discrimination can be avoided.

Technology is by no means neutral, even though it is often perceived that way. It is only as good as the person who created it.

Our solution

The workshop concept developed within the framework of the bachelor’s project provides orientation and helps to create an awareness of the effects of the problem. It provides concrete tools to answer ethical questions arising in the workplace, to understand discrimination – especially if you are not affected by it yourself – and thus to broaden your perspective on diversity.

Insights

The research

There are numerous examples of discrimination by AI or algorithmic decision systems: speech assistants understand women’s voices less well, and face recognition software fails to detect People of Color (PoC for short) or detects them incorrectly. These systems are far from perfect, so it is important to address ethics and the promotion of diversity and participation during development.

When a situation is not considered from diverse enough perspectives, biases appear in the algorithms of the decision systems: one-sided distortions that lead to the exclusion or neglect of groups of people because the systems do not account for them.

What kind of distortions are there?

Technical bias: Here, technical specifications, among other things, can cause groups to be treated differently. Examples include standardizations that do not allow certain inputs, or screens of different sizes: a smaller screen shows fewer results, and those results naturally receive more attention than results that only appear on later pages.

Emergent bias: The interaction between software and its context of use can lead to discrimination, for example when outputs are misinterpreted or software is used in a context for which it is not actually suitable. This bias often only becomes apparent after a while, when values, patterns of behavior, and so on change but the technology does not follow this change (quickly enough).

Pre-existing bias: This is where social prejudices or values flow into the software, which can happen consciously or unconsciously.

Insights

Base of the workshop concept

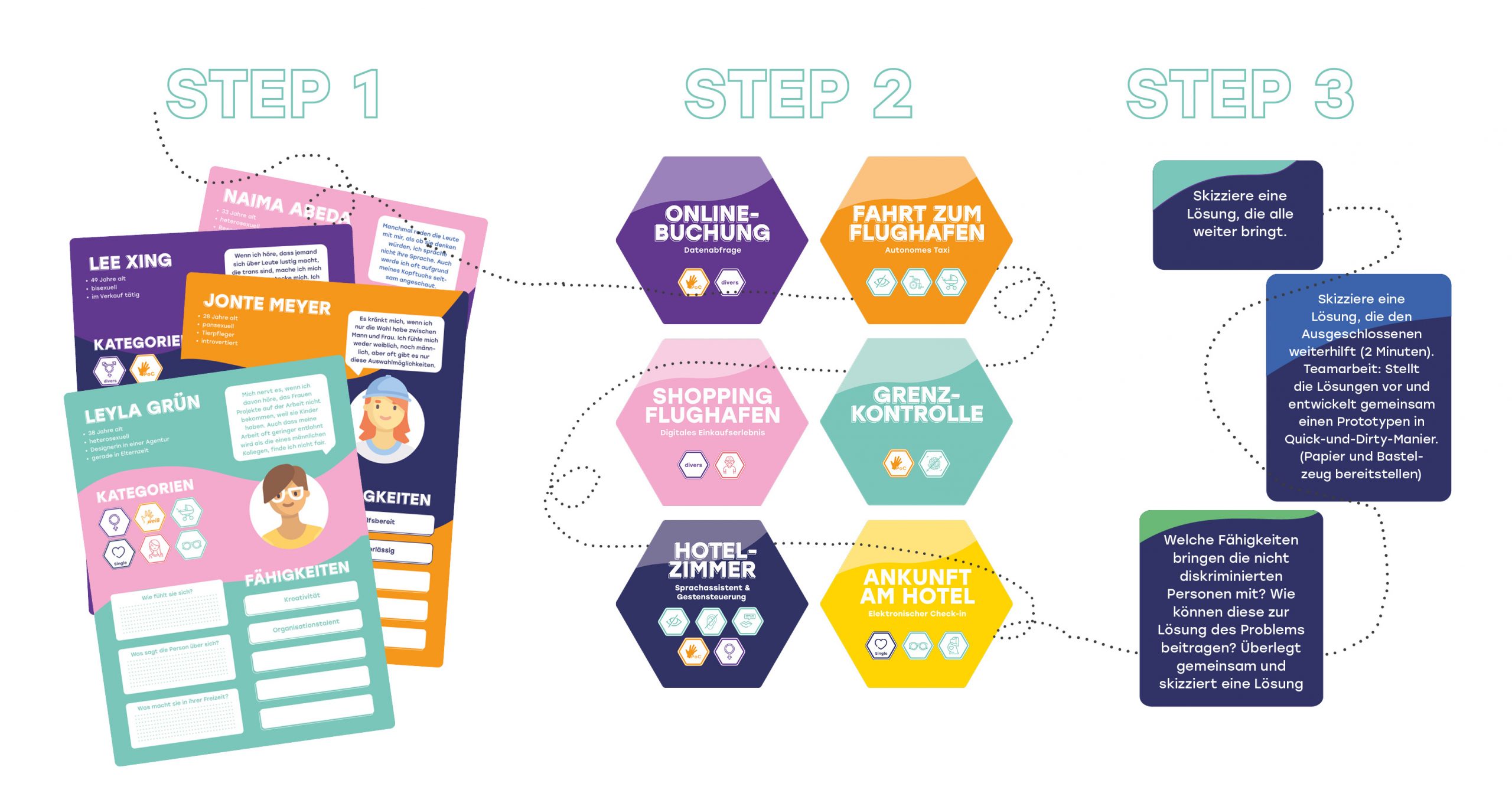

Step 1: Empathy

Personal cards give participants an insight into the situation of a certain character: they get to know the character and learn how this person experiences discrimination or disadvantage. The cards also list the character’s profession and some characteristics and skills. Now you put yourself in the person’s position.

Step 2: Rethinking

In the workshop, the group embarks on an imaginary journey. Various scenarios, from booking to arrival at the holiday destination, take you on an exciting adventure. In which scenarios is your character discriminated against according to the categories? How does bias arise in the system, and how can it be avoided?

Step 3: Solutions

After participants have put themselves in the role and experienced different perspectives, solutions are worked out from the causes that lead to biases. One result is a set of guidelines that can serve as a basis for future orientation.

Still curious?

And do you want more insights and background information?

Just write me an email and I can show you our whole documentation.